Helping enterprises leverage AI for a data-driven edge

We’ll transform your data into a competitive advantage. Since 2016, BigHub has been helping enterprises define AI strategies, build their data engineering foundations, and launch custom AI solutions.


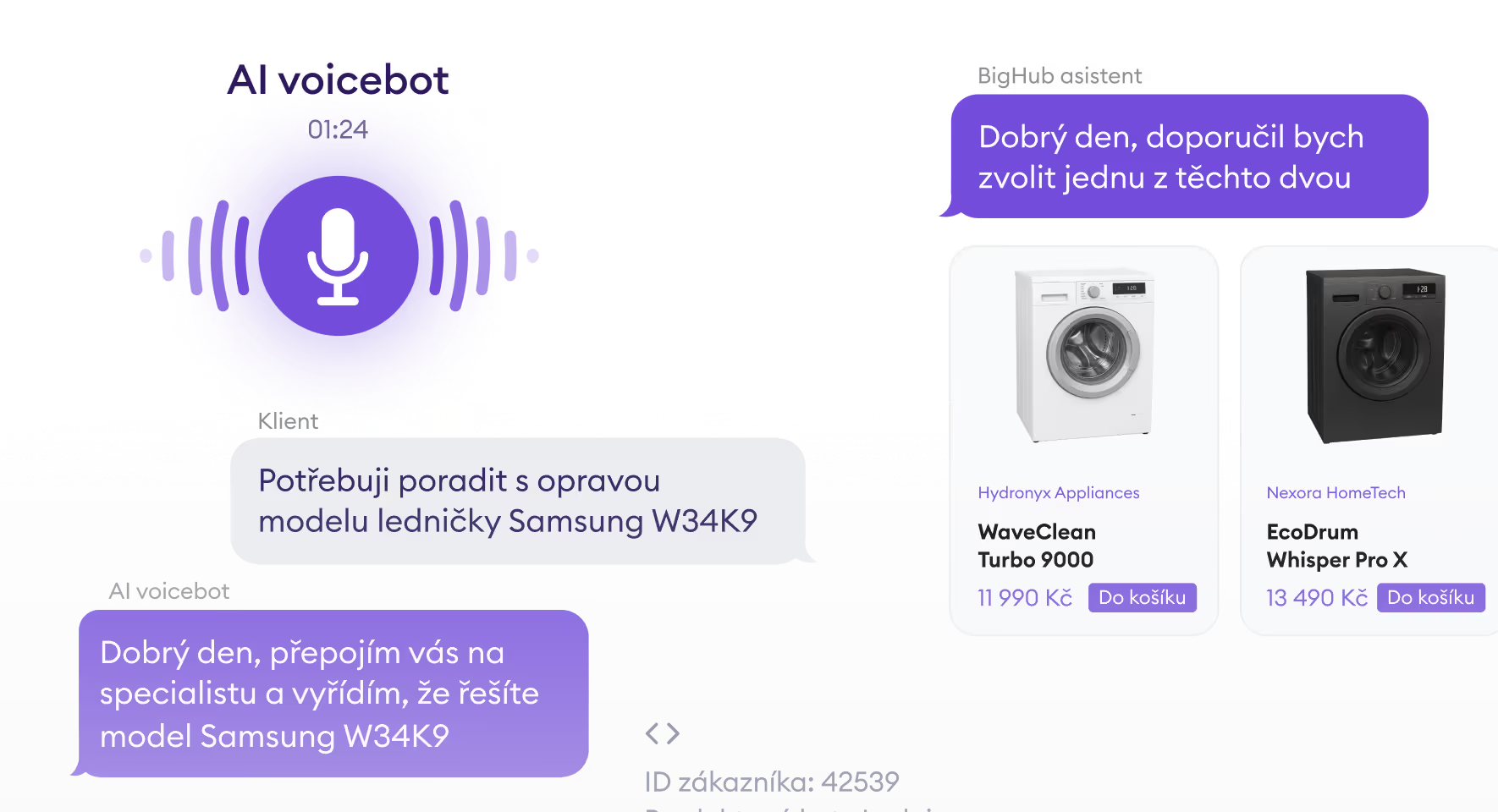

Tailor-made AI applications

Looking for a custom AI solution? We provide end-to-end services with expertise in applied AI, including Gen AI features like knowledge bases and assistants. Our experts also specialize in machine learning for demand forecasting, cross-selling, and upselling.

AI strategy and consulting

BigHub is your consulting and delivery partner for enterprise AI. We work with your teams to identify the highest-value use cases, assess feasibility and ROI, and define a tailored AI roadmap that turns opportunities into measurable business results. From there, we can support implementation end-to-end—covering data readiness, governance, and production rollout. We also manage EU AI Act compliance on your behalf, including risk assessment, documentation, and the controls needed to deploy AI responsibly.

Data engineering services

What if you could have cost-effective, scalable solutions that grow with your business? We specialize in enterprise data platforms, cloud infrastructure optimization, and strengthening data engineering capabilities.

AI's impact on your business is what matters to us

The uniqueness of collaborating with BigHub.

Business first

Data & AI as Software

Long-term partner

Specializing in AI solutions across industries

Explore the specific challenges we resolve for clients across diverse sectors.

What clients value about BigHub

Read feedback from our trusted business partners.

Discover how BigHub transforms businesses with AI in a wide range of fields

Actions speak louder than words. Explore tangible examples of our solutions.

Partners and certifications

Leveraging these partners, technologies, and certifications, we empower businesses to transform data into a competitive advantage.

Proud of our public success

What the media says about BigHub and who has recognized us.

"Czech BigHub isn’t afraid of big data or big challenges."

BigHub, established in 2016, specializes in cutting-edge data technologies and helps companies across all industries innovate.

"ČEZ joins forces with BigHub to harness the power of AI."

BigHub was born when corporations failed to harness AI — now it’s one of the region’s fastest-growing companies.

Get your first consultation free

Want to discuss the details with us? Fill out the short form below. We’ll get in touch shortly to schedule your free, no-obligation consultation.


News from the world of BigHub and AI

We’ve packed valuable insights into articles — don’t miss out.

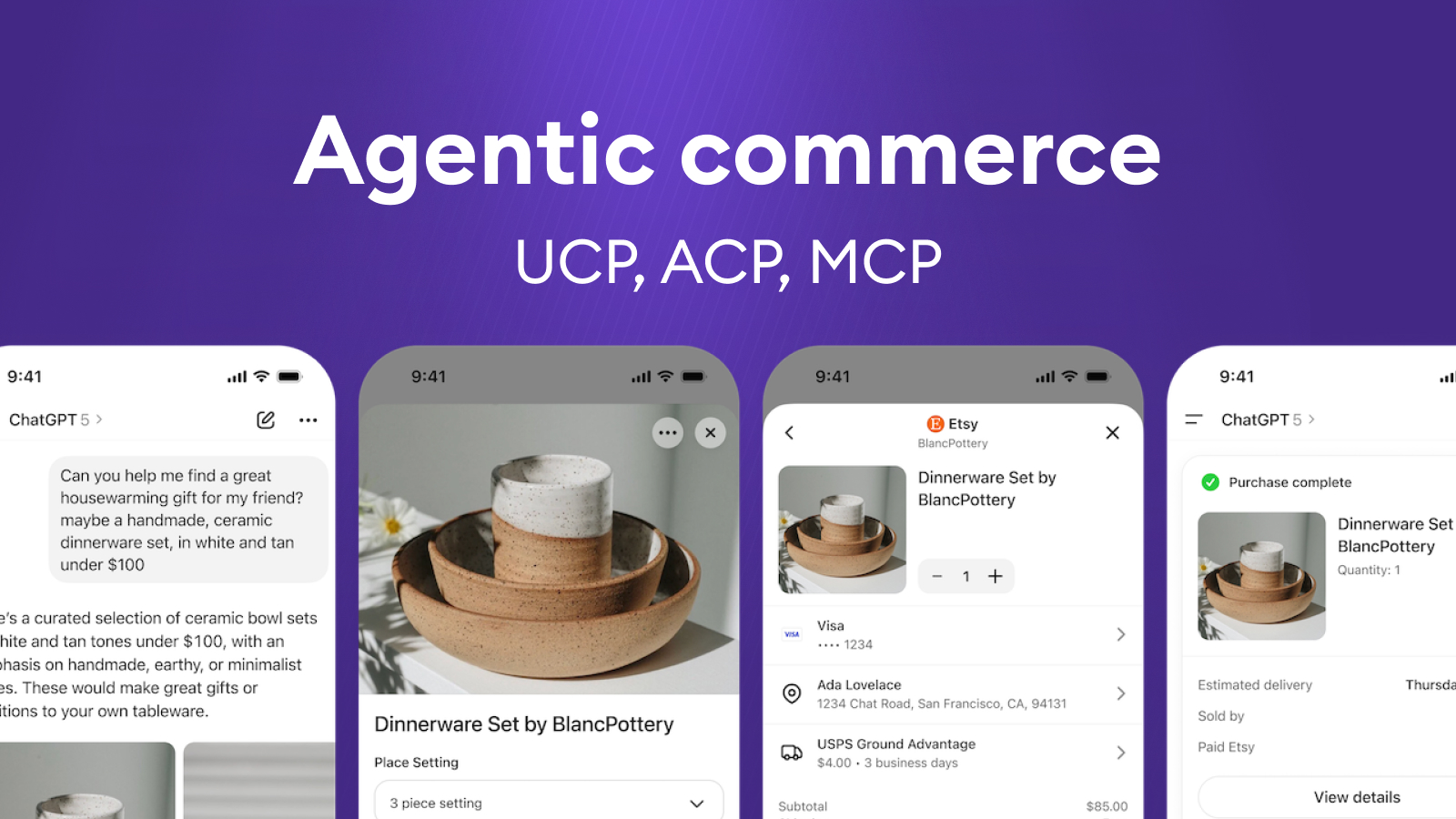

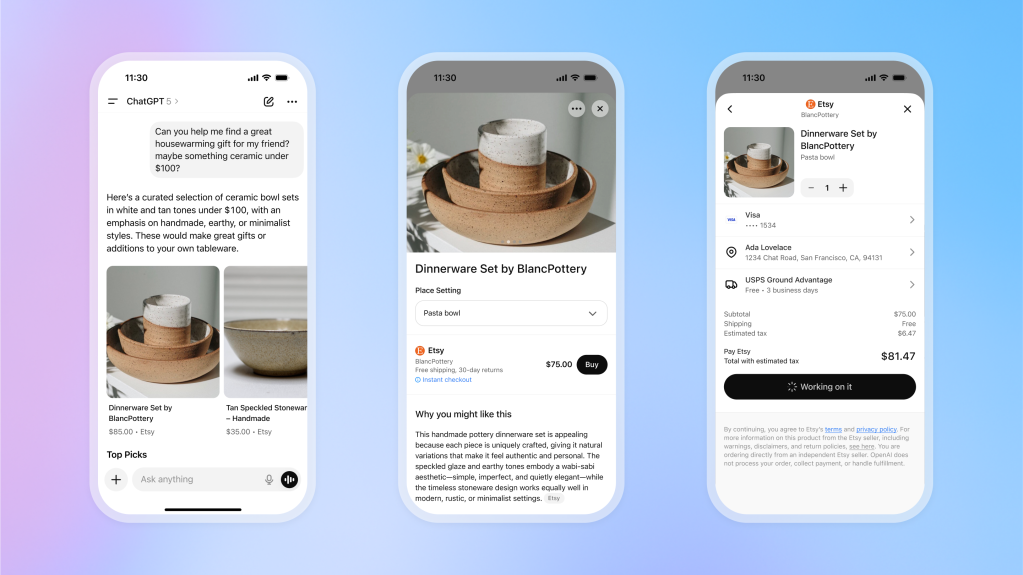

UCP, ACP, MCP in Agentic Commerce: AI is moving from “recommending” to “doing”

Where are we now

Over the last few months, several important things have happened in AI and e-commerce:

- Google introduced UCP (Universal Commerce Protocol) – an open standard for agentic commerce that unifies how an AI agent talks to a merchant: catalogue, cart, shipping, payment, order.

- OpenAI and Stripe launched ACP (Agentic Commerce Protocol) – a protocol for agentic checkout in the ChatGPT ecosystem.

- At the same time, MCP (Model Context Protocol) has emerged as a general way for agents to call tools and services, and OpenAI Apps SDK as a product/distribution layer for agent apps.

In other words, the internet is starting to define standardised “rails” for how AI agents will shop. And the market is shifting from “AI recommends” to “AI actually executes the transaction”.

In this article, we look at:

- what agentic commerce means in practice,

- how UCP, MCP, Apps SDK and ACP fit together,

- what these standards solve – and what they very intentionally don’t solve,

- and where custom agentic commerce makes sense – the exact type of work we do at BigHub.

What is agentic commerce

Agentic commerce is a shopping flow where an AI agent handles part or all of the process on behalf of a person or a business – from discovery and comparison to payment.

A typical scenario:

“Find me marathon running shoes under $150 that can be delivered within two weeks.”

The agent then:

- understands the request,

- queries multiple merchants,

- compares parameters, reviews, prices and delivery options,

- builds a shortlist,

- and, once the user approves, completes the purchase – ideally without the user ever touching a traditional web checkout.
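The steps above can be sketched in a few lines of Python. This is a simplified illustration only: the `Offer` fields, the merchant names and the ranking rule (rating first, then price) are assumptions made for the example, not part of any protocol.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Offer:
    merchant: str
    product: str
    price_usd: float
    delivery_days: int
    rating: float  # average review score, 0-5


def build_shortlist(offers, max_price_usd, max_delivery_days, top_n=3):
    """Filter offers by the user's hard constraints, then rank what is left."""
    eligible = [
        o for o in offers
        if o.price_usd <= max_price_usd and o.delivery_days <= max_delivery_days
    ]
    # Rank by rating (higher first), then by price (lower first).
    return sorted(eligible, key=lambda o: (-o.rating, o.price_usd))[:top_n]


def complete_purchase(offer, approved_by_user):
    """Execute the transaction only after explicit user approval."""
    if not approved_by_user:
        return {"status": "awaiting_approval", "offer": offer}
    return {"status": "ordered", "offer": offer}


# Hypothetical offers collected from several merchants.
offers = [
    Offer("shop-a", "Marathon Pro", 139.0, 5, 4.6),
    Offer("shop-b", "Road Runner", 159.0, 3, 4.8),  # over budget
    Offer("shop-c", "Tempo Lite", 120.0, 21, 4.7),  # delivery too slow
    Offer("shop-d", "Distance 2", 129.0, 10, 4.4),
]

shortlist = build_shortlist(offers, max_price_usd=150, max_delivery_days=14)
result = complete_purchase(shortlist[0], approved_by_user=True)
print([o.merchant for o in shortlist], result["status"])
```

The approval flag is the important design choice here: the agent prepares everything, but the purchase itself stays behind an explicit user decision.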

This doesn’t only apply to B2C. Similar patterns show up in:

- internal procurement,

- B2B ordering,

- recurring replenishment,

- service and returns flows.

The direction is clear: AI is moving from “help me choose” to “get it done for me”.

MCP, Apps SDK, UCP and ACP

It’s useful to see today’s stack as layers.

MCP (Model Context Protocol) is:

- a general standard for how an agent calls tools, APIs and services,

- domain-agnostic (“I can talk to CRM, pricing, catalogue, ERP, …”),

- effectively the way the agent “sees” the world – through capabilities it can invoke.

In short: MCP = how the agent reaches into your systems.
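To make this concrete, here is a minimal sketch of what a tool invocation looks like on the wire. MCP messages are JSON-RPC 2.0, and tools are invoked with the `tools/call` method. The `check_stock` tool and its `sku` argument are hypothetical names used only for illustration.

```python
import json

# Hypothetical tool descriptor, in the shape an MCP server uses to advertise a tool.
check_stock_tool = {
    "name": "check_stock",
    "description": "Return current stock for a product SKU.",
    "inputSchema": {
        "type": "object",
        "properties": {"sku": {"type": "string"}},
        "required": ["sku"],
    },
}


def make_tool_call(request_id, tool_name, arguments):
    """Build a JSON-RPC 2.0 request for MCP's tools/call method."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }


request = make_tool_call(1, check_stock_tool["name"], {"sku": "SHOE-42"})
print(json.dumps(request, indent=2))
```

In practice an MCP client library would build and send this message for you. The sketch only shows that, from the agent's side, every internal system ends up looking like a named capability with a typed input.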

OpenAI Apps SDK:

- provides UI, runtime and distribution for agents (ChatGPT Apps, user-facing interface),

- lets you quickly wrap an agent into a usable product:

- chat, forms, actions,

- distribution inside the ChatGPT ecosystem,

- basic management and execution.

In short: Apps SDK = how you turn an agent into a product people actually use.

UCP – Domain standard for commerce workflows

UCP (Universal Commerce Protocol) from Google and partners:

- is a domain-specific standard for commerce,

- unifies how an agent talks to a merchant about:

- catalogue, variants, prices,

- cart, shipping, payment, order,

- discounts, loyalty, refunds, tracking and support,

- is designed to work across Google Search, Gemini and other AI surfaces.

In short: UCP = the concrete language and workflow of buying.

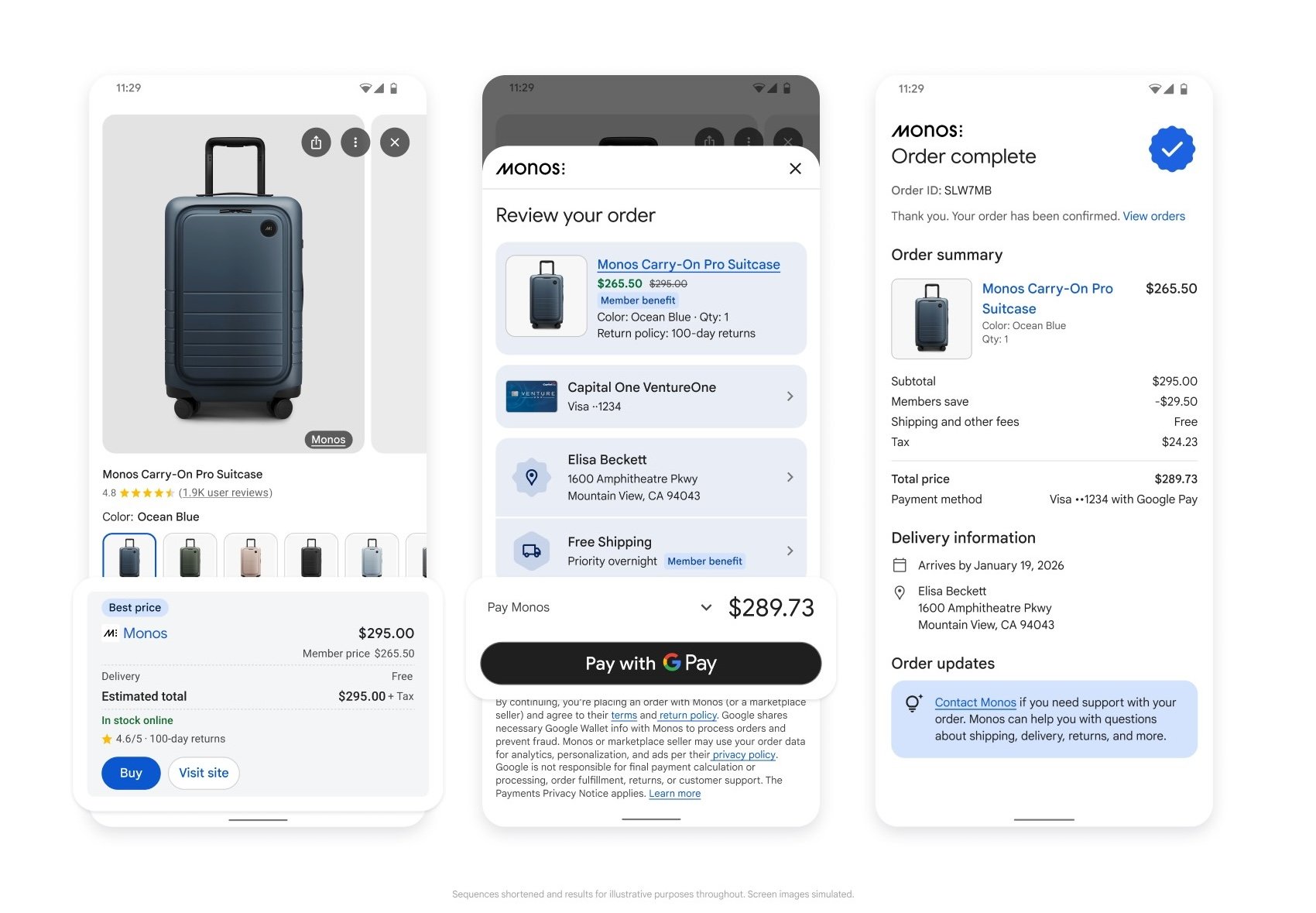

ACP – Agentic checkout in the ChatGPT ecosystem

ACP (Agentic Commerce Protocol) from OpenAI/Stripe:

- targets a similar domain from the ChatGPT side,

- focuses strongly on checkout, payments and orders,

- powers features like Instant Checkout in ChatGPT.

From a merchant’s point of view, UCP and ACP are competing commerce standards (no one wants three different protocols in their stack).

From an architecture point of view, they can coexist as different dialects an agent uses depending on the channel (ChatGPT vs. Google / Gemini).

What these standards do – and what they don’t

The common pattern is important. UCP and ACP do not make agents “smart”. They just give them a consistent language.

These standards typically cover:

- how the agent formally communicates with the merchant and checkout,

- how offers and orders are structured,

- how payment and authorisation are handled securely,

- how a purchase can flow across different AI channels.

They do not (and cannot) solve:

- the quality and structure of your product catalogue, attributes and availability,

- integration into ERP, WMS/OMS, CRM, loyalty, pricing engine, campaign tooling,

- your business logic – margin vs. SLA vs. customer experience vs. revenue,

- governance, risk, approvals – who is allowed to order what, when a human must step in, how decisions are audited.

Practically, this means:

- you can be formally “UCP/ACP-ready”,

- and still deliver a poor agent experience if:

- data is inconsistent,

- delivery promises can’t be kept,

- pricing and promo logic breaks in a multi-channel world,

- the agent has no access to real-time states and internal rules.

The standard is a necessary technical minimum, not a finished solution.

How we approach Agentic Commerce at BigHub

At BigHub, we see UCP, MCP, ACP and Apps SDK as infrastructure building blocks. On real projects, we focus on what creates actual competitive advantage on top of them.

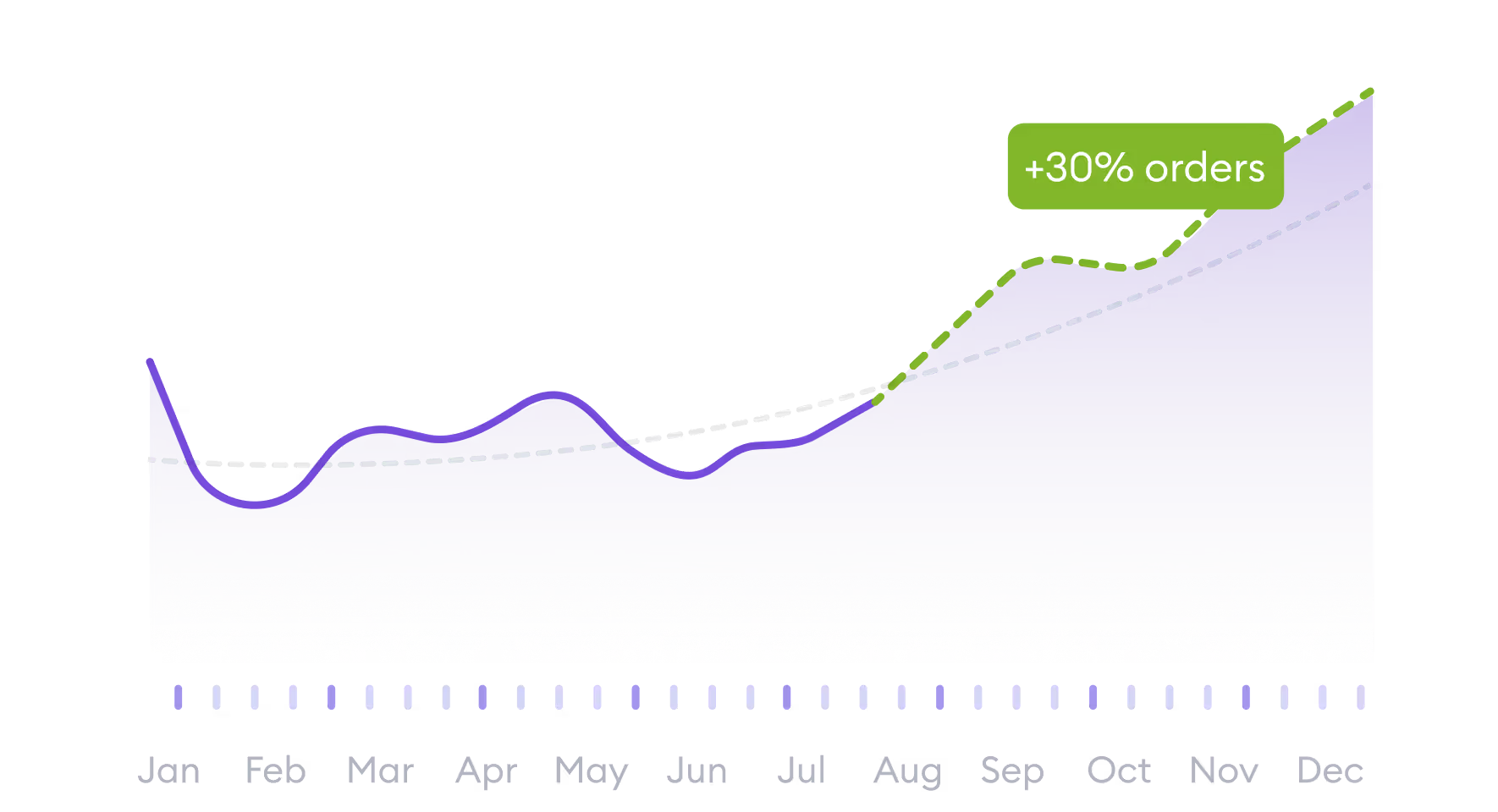

We build ML-powered commerce agents that can:

- optimise dynamic offers and pricing (bundles, alternatives, smart trade-offs based on margin, SLA and priorities),

- deliver personalised search and shortlists (customer context, preferences, budget, interaction history),

- handle argumentation and objections (why this option, what are the alternatives, explain the trade-offs),

- and only then smoothly push the checkout over the finish line.

On top of that, we add an integration layer via MCP (capabilities + connections to core systems). For UI and distribution, we often use OpenAI Apps SDK when we need to get an agent in front of real users quickly. Where it makes sense, we plug into standards like UCP/ACP instead of writing bespoke integrations for every single channel.

Where custom Agentic Commerce makes the difference

Standards (UCP/ACP/MCP) are extremely valuable where:

- you don’t want to invent your own protocol for connecting to AI channels,

- you need interoperability (ChatGPT, Google/Gemini, others),

- you want to reduce integration overhead for merchants.

A custom approach adds the most value in these areas:

1) Connecting the agent to core systems

- ERP, WMS/OMS, CRM, loyalty, pricing, returns, contact centre…

- the agent must live in your real operational architecture, not a demo sandbox.

Typically you need a dedicated integration and orchestration layer that:

- speaks UCP/ACP/MCP “upwards”,

- speaks your specific systems and APIs “downwards”.
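A rough sketch of such a layer, assuming two hypothetical internal systems (a warehouse system and a pricing engine) behind small interfaces. All class and method names here are illustrative, and the returned offer is a protocol-neutral dictionary that a UCP, ACP or MCP adapter would then map to its own message format.

```python
from typing import Protocol


class InventorySystem(Protocol):
    def stock_for(self, sku: str) -> int: ...


class PricingSystem(Protocol):
    def price_for(self, sku: str) -> float: ...


class FakeWms:
    """Stand-in for a real WMS/ERP client."""

    def __init__(self, stock):
        self._stock = stock

    def stock_for(self, sku):
        return self._stock.get(sku, 0)


class FakePricing:
    """Stand-in for a real pricing engine client."""

    def __init__(self, prices):
        self._prices = prices

    def price_for(self, sku):
        return self._prices[sku]


class CommerceGateway:
    """Answers protocol-neutral requests 'upwards' by calling internal systems 'downwards'."""

    def __init__(self, inventory: InventorySystem, pricing: PricingSystem):
        self.inventory = inventory
        self.pricing = pricing

    def get_offer(self, sku: str, quantity: int) -> dict:
        in_stock = self.inventory.stock_for(sku)
        unit_price = self.pricing.price_for(sku)
        return {
            "sku": sku,
            "quantity": quantity,
            "available": in_stock >= quantity,
            "unit_price": unit_price,
            "total": round(unit_price * quantity, 2),
        }


gateway = CommerceGateway(FakeWms({"SHOE-42": 3}), FakePricing({"SHOE-42": 129.0}))
offer = gateway.get_offer("SHOE-42", quantity=2)
print(offer)
```

Keeping the internal clients behind interfaces means the same gateway can serve several agent channels without each channel knowing anything about the ERP or WMS.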

2) Domain logic and business rules

This is where competitive advantage is created:

- when the agent can execute autonomously vs. when it should only recommend,

- how it balances margin, SLA, availability, customer experience and revenue,

- how it works with promotions, loyalty, cross-sell / up-sell scenarios.

This is not a protocol question. It’s about concrete rules on top of your data and KPIs.
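As an illustration of what “concrete rules” can mean, the sketch below decides whether an agent may execute an order on its own. The thresholds, field names and action labels are invented for the example; real rules would come from your own data and KPIs.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class OrderContext:
    order_value: float       # total order value in your currency
    margin_pct: float        # expected margin on the order
    in_stock: bool           # can the delivery promise be kept?
    user_pre_approved: bool  # has the user allowed autonomous purchases?


def decide_action(ctx, max_auto_value=200.0, min_margin_pct=10.0):
    """Return what the agent is allowed to do for this order."""
    if not ctx.in_stock:
        return "recommend_alternative"
    if ctx.margin_pct < min_margin_pct:
        return "escalate_to_human"
    if ctx.user_pre_approved and ctx.order_value <= max_auto_value:
        return "execute"
    return "recommend"


print(decide_action(OrderContext(120.0, 18.0, True, True)))
print(decide_action(OrderContext(950.0, 18.0, True, True)))
```

Because the decision is a plain function with explicit inputs, each outcome can be logged and audited, which matters once agents start executing transactions.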

3) Multi-channel and the mix of B2C / B2B / internal agents

Real-world commerce looks like this:

- B2C webshop,

- B2B ordering portal,

- internal purchasing agent,

- in-store sales assistant,

- customer service agent.

A custom framework lets you:

- share logic across roles and channels,

- respect permissions and limits,

- support flows like “AI starts in chat, finishes in the store”.

4) European context: regulation, security, data residency

For European companies, several constraints matter:

- regulation (EU AI Act, GDPR, sector-specific rules),

- internal security posture, audits, risk controls,

- where data and models actually run (US vs. EU),

- how explainable and auditable agent decisions are.

Standards are global, but architecture and governance have to be local and tailored.

What retailers and enterprises should take away from UCP (and other similar protocols)

If you’re thinking about agentic commerce, it’s worth asking a few practical questions:

- Are we “agent-ready” not only at the protocol level, but also in terms of data and processes?

- In which use cases do we actually want the agent to execute the transaction – and where should it stay at the recommendation level?

- How will agentic commerce fit into our existing systems, pricing, campaigns and SLAs?

- Who owns agent initiatives internally (KPI, P&L) and how will we measure success?

- Which parts make sense to solve via standards (UCP/ACP/MCP) and where do we already need a custom agent framework?

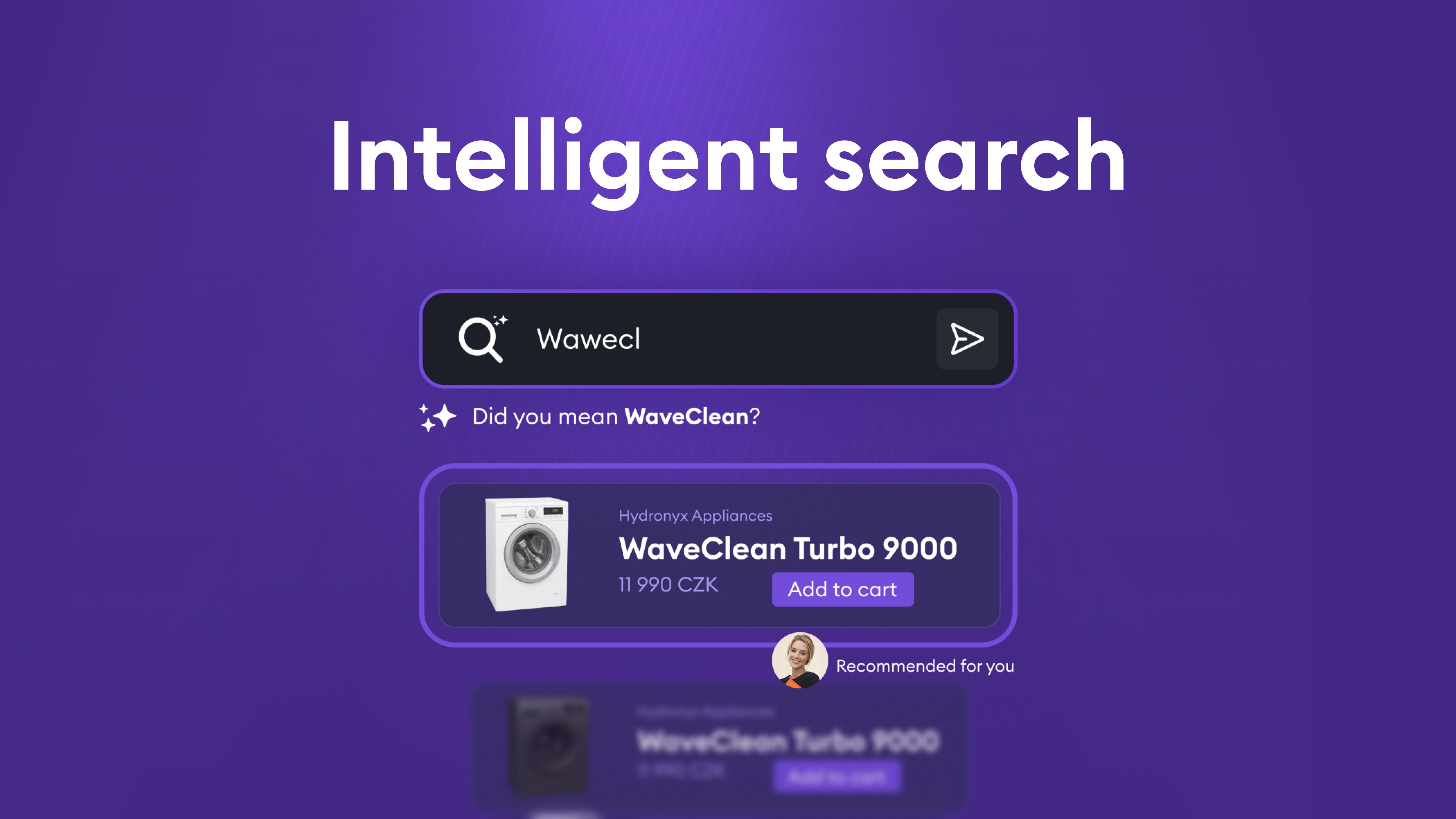

How to build intelligent search: From full-text to optimized hybrid search

The problem: Limits of traditional search

Classic full-text search based on algorithms like BM25 has several fundamental constraints:

1. Typos and variants

- Users frequently submit queries with typos or alternate spellings.

- Traditional search expects exact or near-exact text matches.

2. Title-only searching

- Full-text search often targets specific fields (e.g., product or entity name).

- If relevant information lives in a description or related entities, the system may miss it.

3. Missing semantic understanding

- The system doesn’t understand synonyms or related concepts.

- A query for “car” won’t find “automobile” or “vehicle,” even though they refer to the same or a closely related concept.

- Cross-lingual search is nearly impossible—a Czech query won’t retrieve English results.

4. Contextual search

- Users often search by context, not exact names.

- For example, “products by manufacturer X” should return all relevant products, even if the manufacturer name doesn’t appear in the product name itself.

The solution: Hybrid search with embeddings

The remedy is to combine two approaches: traditional full-text search (BM25) and vector embeddings for semantic search.

Vector embeddings for semantic understanding

Vector embeddings map text into a multi-dimensional space where semantically similar meanings sit close together. This enables:

- Meaning-based retrieval: A query like “notebook” can match “laptop,” “portable computer,” or related concepts.

- Cross-lingual search: A Czech query can find English results if they share meaning.

- Contextual search: The system captures relationships between entities and concepts.

- Whole-content search: Embeddings can represent the entire document, not just the title.
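“Close together” is usually measured with cosine similarity. The sketch below uses tiny hand-made three-dimensional vectors purely for illustration; real embeddings have hundreds or thousands of dimensions and come from an embedding model.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Made-up toy vectors, NOT real model output.
toy_embeddings = {
    "notebook": [0.90, 0.10, 0.00],
    "laptop": [0.85, 0.15, 0.05],
    "banana": [0.00, 0.20, 0.95],
}

sim_laptop = cosine_similarity(toy_embeddings["notebook"], toy_embeddings["laptop"])
sim_banana = cosine_similarity(toy_embeddings["notebook"], toy_embeddings["banana"])
print(f"notebook vs laptop: {sim_laptop:.3f}")
print(f"notebook vs banana: {sim_banana:.3f}")
```

With these toy vectors, “notebook” and “laptop” score close to 1 while “notebook” and “banana” score close to 0, which is the behaviour semantic search relies on.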

Why embeddings alone are not enough

Embeddings are powerful, but not sufficient on their own:

- Typos: Small character changes can produce very different embeddings.

- Exact matches: Sometimes we need precise string matching, where full-text excels.

- Performance: Vector search can be slower than optimized full-text indexes.

A hybrid approach: BM25 + HNSW

The ideal solution blends both:

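- BM25 (Best Matching 25): A classic full-text ranking algorithm that excels at exact term matches. Typo tolerance comes from the full-text layer around it (for example, fuzzy matching), not from BM25 itself.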

- HNSW (Hierarchical Navigable Small World): An efficient nearest-neighbor algorithm for fast vector search.

Combining them yields the best of both worlds: the precision of full-text for exact matches and the semantic understanding of embeddings for contextual queries.
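One common way to merge a full-text ranking and a vector ranking is Reciprocal Rank Fusion (RRF). The article does not prescribe a specific fusion method, so treat this as one reasonable option rather than the only one. The document IDs and the constant `k=60` are illustrative.

```python
def reciprocal_rank_fusion(rankings, k=60):
    """Merge several ranked lists of document IDs into one list.

    Each document gets 1 / (k + rank) from every list it appears in;
    documents ranked high in multiple lists accumulate the largest score.
    """
    scores = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    # Sort by fused score (descending); break ties by ID for determinism.
    return sorted(scores, key=lambda doc_id: (-scores[doc_id], doc_id))


bm25_ranking = ["a", "b", "c"]    # hypothetical full-text results
vector_ranking = ["b", "d", "a"]  # hypothetical vector results

fused = reciprocal_rank_fusion([bm25_ranking, vector_ranking])
print(fused)
```

RRF works on ranks rather than raw scores, so it avoids having to normalise BM25 scores and vector distances onto the same scale.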

The challenge: Getting the ranking right

Finding relevant candidates is only step one. Equally important is ranking them well. Users typically click the first few results; poor ordering undermines usefulness.

Why simple “Sort by” is not enough

Sorting by a single criterion (e.g., date) fails because multiple factors matter simultaneously:

- Relevance: How well the result matches the query (from both full-text and vector signals).

- Business value: Items with higher margin may deserve a boost.

- Freshness: Newer items are often more relevant.

- Popularity: Frequently chosen items may be more interesting to users.

Scoring functions: Combining multiple signals

Instead of a simple sort, you need a composite scoring system that blends:

- Full-text score: How well BM25 matches the query.

- Vector distance: Semantic similarity from embeddings.

- Scoring functions, such as:

- Magnitude functions for margin/popularity (higher value → higher score).

- Freshness functions for time (newer → higher score).

- Other business metrics as needed.

The final score is a weighted combination of these signals. The hard part is that the right weights are not obvious—you must find them experimentally.
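A minimal sketch of such a weighted combination, assuming the text and vector scores are already normalised to the 0 to 1 range. The linear interpolation, the 30-day freshness window and the margin range are assumptions chosen for the example.

```python
from datetime import datetime, timedelta, timezone


def magnitude_boost(value, low, high):
    """Map a numeric value linearly onto 0..1, clamped at both ends."""
    if high <= low:
        raise ValueError("high must be greater than low")
    return min(1.0, max(0.0, (value - low) / (high - low)))


def freshness_boost(updated_at, now, window_days):
    """1.0 for brand-new items, falling linearly to 0.0 at window_days old."""
    age_days = (now - updated_at).total_seconds() / 86400
    return min(1.0, max(0.0, 1.0 - age_days / window_days))


def composite_score(text_score, vector_score, margin, updated_at, now, weights):
    """Weighted sum of relevance and business signals."""
    return (
        weights["text"] * text_score
        + weights["vector"] * vector_score
        + weights["margin"] * magnitude_boost(margin, 0.0, 0.5)
        + weights["freshness"] * freshness_boost(updated_at, now, window_days=30)
    )


now = datetime(2025, 1, 31, tzinfo=timezone.utc)
weights = {"text": 1.0, "vector": 1.0, "margin": 1.0, "freshness": 1.0}
score = composite_score(
    text_score=0.8,
    vector_score=0.6,
    margin=0.25,
    updated_at=now - timedelta(days=15),
    now=now,
    weights=weights,
)
print(round(score, 3))
```

The four entries in `weights` are exactly the values that have to be found experimentally.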

Hyperparameter search: Finding optimal weights

Tuning weights for full-text, vector embeddings, and scoring functions is critical to result quality. We use hyperparameter search to do this systematically.

Building a test dataset

A good test set is the foundation of successful hyperparameter search. We assemble a corpus of queries where we know the ideal outcomes:

- Reference results: For each test query, a list of expected results in the right order.

- Annotations: Each result labeled relevant/non-relevant, optionally with priority.

- Representative coverage: Include diverse query types (exact matches, synonyms, typos, contextual queries).

Metrics for quality evaluation

To objectively judge quality, we compare actual results to references using standard metrics:

1. Recall (completeness)

- Do results include everything they should?

- Are all relevant items present?

2. Ranking quality (ordering)

- Are results in the correct order?

- Are the most relevant results at the top?

Common metrics include NDCG (Normalized Discounted Cumulative Gain), which captures both completeness and ordering. Other useful metrics are Precision@K (how many relevant items in the top K positions) and MRR (Mean Reciprocal Rank), which measures the position of the first relevant result.
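These metrics are short enough to implement directly. The sketch below uses the standard definitions; the document IDs and relevance grades in the example are made up.

```python
import math


def precision_at_k(ranked, relevant, k):
    """Share of the top-k results that are relevant."""
    return sum(1 for doc in ranked[:k] if doc in relevant) / k


def reciprocal_rank(ranked, relevant):
    """1 / position of the first relevant result, or 0.0 if none is found."""
    for position, doc in enumerate(ranked, start=1):
        if doc in relevant:
            return 1.0 / position
    return 0.0


def mean_reciprocal_rank(runs):
    """MRR over several queries; runs is a list of (ranked, relevant) pairs."""
    return sum(reciprocal_rank(r, rel) for r, rel in runs) / len(runs)


def dcg(gains):
    """Discounted cumulative gain: later positions count less."""
    return sum(g / math.log2(i + 1) for i, g in enumerate(gains, start=1))


def ndcg_at_k(ranked, gain_by_doc, k):
    """DCG of the actual ranking divided by the DCG of the ideal ranking."""
    actual = dcg([gain_by_doc.get(doc, 0) for doc in ranked[:k]])
    ideal = dcg(sorted(gain_by_doc.values(), reverse=True)[:k])
    return actual / ideal if ideal > 0 else 0.0


ranked = ["x", "a", "b"]        # what the system returned
gain_by_doc = {"a": 3, "b": 1}  # graded relevance from annotations
relevant = set(gain_by_doc)

print(precision_at_k(ranked, relevant, k=3))
print(reciprocal_rank(ranked, relevant))
print(round(ndcg_at_k(ranked, gain_by_doc, k=3), 3))
```

Here the irrelevant result in first place lowers both the reciprocal rank and NDCG, even though all relevant items are present in the top three.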

Iterative optimization

Hyperparameter search proceeds iteratively:

- Set initial weights: Start with sensible defaults.

- Test combinations: Systematically vary:

- Field weights for full-text (e.g., product title vs. description).

- Weights for vector fields (embeddings from different document parts).

- Boosts for scoring functions (margin, recency, popularity).

- Aggregation functions (how to combine scoring functions).

- Evaluate: Run the test dataset for each combination and compute metrics.

- Select the best: Choose the parameter set with the strongest metrics.

- Refine: Narrow around the best region and repeat as needed.

This can be time-consuming, but it’s essential for optimal results. Automation lets you test hundreds or thousands of combinations to find the best.
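The loop can be sketched as a plain grid search. To keep the example self-contained it tunes only two weights and uses top-1 accuracy as a stand-in metric; a real setup would query the search service and use metrics such as NDCG. The test set and scores are invented.

```python
import itertools

# Hypothetical test set: per query, each candidate has (text_score, vector_score),
# and we know which candidate should rank first.
TEST_SET = [
    {"candidates": {"a": (0.9, 0.2), "b": (0.5, 0.8)}, "expected_top": "b"},
    {"candidates": {"c": (0.9, 0.3), "d": (0.3, 0.4)}, "expected_top": "c"},
    {"candidates": {"e": (0.2, 0.9), "f": (0.8, 0.4)}, "expected_top": "e"},
]


def top_result(candidates, w_text, w_vector):
    """Return the candidate with the highest weighted score."""
    return max(
        sorted(candidates),  # sorted for deterministic tie-breaking
        key=lambda doc: w_text * candidates[doc][0] + w_vector * candidates[doc][1],
    )


def top1_accuracy(w_text, w_vector):
    """Share of test queries where the expected result is ranked first."""
    hits = sum(
        top_result(q["candidates"], w_text, w_vector) == q["expected_top"]
        for q in TEST_SET
    )
    return hits / len(TEST_SET)


def grid_search(grid):
    """Try every weight combination and keep the first best-scoring one."""
    best = None
    for w_text, w_vector in itertools.product(grid, repeat=2):
        score = top1_accuracy(w_text, w_vector)
        if best is None or score > best[0]:
            best = (score, w_text, w_vector)
    return best


best_score, best_w_text, best_w_vector = grid_search([0.0, 0.5, 1.0])
print(best_score, best_w_text, best_w_vector)
```

For the “refine” step you would rerun the same search on a finer grid around the best weights found so far.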

Monitoring and continuous improvement

Even after tuning, ongoing monitoring and iteration are crucial.

Tracking user behavior

A key signal is whether users click the results they’re shown. If they skip the first result and click the third or fourth, your ranking likely needs work.

Track:

- CTR (Click-through rate): How often users click.

- Click position: Which rank gets the click (ideally the top results).

- No-click queries: Queries with zero clicks may indicate poor results.
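Assuming you log each search with the position the user clicked (or nothing, if they did not click), these signals take only a few lines to compute. The log format below is a hypothetical example.

```python
def click_metrics(search_log):
    """Summarise click behaviour from a list of logged searches.

    Each entry is a dict with 'query' and 'clicked_position'
    (1-based rank of the clicked result, or None for no click).
    """
    total = len(search_log)
    clicked = [
        entry["clicked_position"]
        for entry in search_log
        if entry["clicked_position"] is not None
    ]
    return {
        "ctr": len(clicked) / total if total else 0.0,
        "mean_click_position": sum(clicked) / len(clicked) if clicked else None,
        "no_click_queries": [
            entry["query"]
            for entry in search_log
            if entry["clicked_position"] is None
        ],
    }


search_log = [
    {"query": "running shoes", "clicked_position": 1},
    {"query": "trail shoes", "clicked_position": 3},
    {"query": "marathon sokcs", "clicked_position": None},
    {"query": "insoles", "clicked_position": 2},
]

metrics = click_metrics(search_log)
print(metrics)
```

A mean click position that drifts away from 1 and a growing list of no-click queries are both early signs that the ranking needs another tuning round.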

Analyzing problem cases

When you find queries where users avoid the top results:

- Log these cases: Save the query, returned results, and the clicked position.

- Diagnose: Why did the system rank poorly? Missing relevant items? Wrong ordering?

- Augment the test set: Add these cases to your evaluation corpus.

- Adjust weights/rules: Update weights or introduce new heuristics as needed.

This iterative loop ensures the system keeps improving and adapts to real user behavior.

Implementing on Azure: AI search and OpenAI embeddings

All of the above can be implemented effectively with Microsoft Azure.

Azure AI Search

Azure AI Search (formerly Azure Cognitive Search) provides:

- Hybrid search: Native support for combining full-text (BM25) and vector search.

- HNSW indexes: An efficient HNSW implementation for vector retrieval.

- Scoring profiles: A flexible framework for custom scoring functions.

- Text weights: Per-field weighting for full-text.

- Vector weights: Per-field weighting for vector embeddings.

Scoring profiles can combine:

- Magnitude scoring for numeric values (margin, popularity).

- Freshness scoring for temporal values (created/updated dates).

- Text weights for full-text fields.

- Vector weights for embedding fields.

- Aggregation functions to blend multiple scoring signals.
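For orientation, a scoring profile in an Azure AI Search index definition looks roughly like the structure below, written here as a Python dictionary that serialises to the JSON used by the REST API. The profile name, field names (`title`, `description`, `margin`, `updatedAt`) and all boost values are assumptions for illustration; check the current Azure documentation before relying on specific properties.

```python
import json

# Hypothetical scoring profile; it would sit in the "scoringProfiles" array
# of the index definition.
scoring_profile = {
    "name": "business-boost",
    # Per-field weights for full-text matches.
    "text": {"weights": {"title": 3.0, "description": 1.0}},
    "functions": [
        {
            # Higher margin -> higher score, within a bounded range.
            "type": "magnitude",
            "fieldName": "margin",
            "boost": 2.0,
            "interpolation": "linear",
            "magnitude": {
                "boostingRangeStart": 0,
                "boostingRangeEnd": 50,
                "constantBoostBeyondRange": True,
            },
        },
        {
            # Items updated within the last 30 days get a boost.
            "type": "freshness",
            "fieldName": "updatedAt",
            "boost": 1.5,
            "interpolation": "quadratic",
            "freshness": {"boostingDuration": "P30D"},
        },
    ],
    # How the individual function scores are combined.
    "functionAggregation": "sum",
}

print(json.dumps(scoring_profile, indent=2))
```

The boost values and text weights in this structure are the parameters a hyperparameter search would vary.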

OpenAI embeddings

For embeddings, we use OpenAI models such as text-embedding-3-large:

- High-quality embeddings: Strong multilingual performance, including Czech.

- Consistent API: Straightforward integration with Azure AI Search.

- Scalability: Handles high request volumes.

Multilingual capability makes these embeddings particularly suitable for Czech and other smaller languages.

Integration

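Azure AI Search supports integrated vectorization with OpenAI embedding models hosted in Azure OpenAI, which simplifies integration. An embedding step in the indexing pipeline generates vectors for your vector fields as documents are indexed, and a vectorizer attached to those fields embeds the query text at search time.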

Microsoft Ignite 2025: The shift from AI experiments to enterprise-grade agents

1. AI agents move centre stage

Microsoft’s headline reveal, Agent 365, positions AI agents as the new operational layer of the digital workplace. It provides a central hub to register, monitor, secure, and coordinate agents across the organisation.

At the same time, Microsoft 365 Copilot introduced dedicated Word, Excel, and PowerPoint agents, capable of autonomously generating, restructuring, and analysing content based on business context.

Why this matters

Enterprises are shifting from “asking AI questions” to “assigning AI work”. Agent-based architectures will gradually replace many single-purpose assistants.

What organisations can do

- Identify workflows suitable for autonomous agents

- Standardise agent behaviour and permissions

- Start pilot deployments inside Microsoft 365 ecosystems

2. Integration and orchestration become non-negotiable

Microsoft emphasised interoperability through the Model Context Protocol (MCP). Agents across Teams, Microsoft 365, and third-party apps can now share context and execute coordinated multi-step workflows.

Why this matters

Real automation requires more than standalone copilots — it requires orchestration between tools, data sources, and departments.

What organisations can do

- Map cross-app workflows

- Connect productivity, CRM/ERP and operational platforms

- Design agent ecosystems rather than isolated assistants

3. Governance and security move into the spotlight

As agents gain autonomy, Microsoft introduced governance capabilities such as:

- visibility into permissions

- behavioural monitoring

- integration with Defender, Entra, and Purview

- centralised policy control

- data-loss prevention

Why this matters

AI at scale must be fully observable and compliant. Governance will become a foundational requirement for all agent deployments.

What organisations can do

- Define who is allowed to create/modify agents

- Establish audit and monitoring standards

- Build guardrails before rolling out automation

Read the official Microsoft article with all security updates & news - Link

4. Windows, Cloud PCs, and the rise of the AI-enabled workspace

Microsoft presented Windows 11 and Windows 365 as key components of the AI-first workplace. Features include:

- AI-enhanced Cloud PCs

- support for shared and frontline devices

- local agent inference on capable hardware

- endpoint-level automation

Why this matters

Distributed teams gain consistent, secure work environments with native AI capabilities.

What organisations can do

- Evaluate Cloud PC scenarios

- Modernise workplace setups for agent-driven workflows

- Explore AI-enabled devices for operational teams

5. AI infrastructure and Azure evolution

Ignite highlighted continued investment in Azure AI capabilities, including:

- improved model hosting and versioning

- hybrid CPU/GPU inference

- faster deployment pipelines

- more cost-efficient fine-tuning

- enhanced governance for AI training data

Full report here - Link

Why this matters

Scalable data pipelines and model infrastructure remain essential foundations for any agent-driven environment.

What organisations can do

- Update data architecture for AI-readiness

- Implement vector indexing and retrieval pipelines

- Optimise model hosting costs

6. Copilot Studio and plug-in ecosystem expand rapidly

Copilot Studio received major updates, transforming it into a central automation and integration hub. New capabilities include:

- custom agent creation with visual logic

- no-code multi-step workflows

- plug-ins for internal APIs and line-of-business systems

- improved grounding using enterprise data

- expanded connectors for CRM/ERP/event platforms

Why this matters

Organisations can build specialised copilots and agents — connected to their internal systems and business logic.

What organisations can do

- Develop domain-specific copilots

- Use connectors to integrate existing systems

- Leverage visual logic for quick experiments

7. Fabric + Azure AI integration

Microsoft Fabric now provides deeper AI readiness features:

- tight integration with Azure AI Studio

- automated pipelines for AI data preparation

- vector indexing and RAG capabilities inside OneLake

- enhanced lineage and governance

- performance boosts for large-scale analytics

Why this matters

AI agents depend on clean, governed, real-time data. Microsoft states that Fabric now enables building unified data + AI environments more efficiently.

What organisations can do

- Consolidate disparate data pipelines into Fabric

- Implement vector search for internal knowledge retrieval

- Build governed AI datasets with lineage tracking

What this means for companies

Across all announcements, one trend is consistent: AI is becoming an operational layer—not an add-on.

For organisations in finance, energy, logistics, retail, or event management, this brings clear implications:

- It’s time to move from experimentation to real deployment.

- Automated agents will replace many single-purpose copilots.

- Governance frameworks must be in place before scaling.

- Integration across apps, data sources, and workflows is essential.

- AI will increasingly live inside productivity tools employees already use.

- The competitive advantage will come from how well agents connect to business processes—not from which model is used.

BigHub is well-positioned to guide you through this transition with personalized strategy, architecture, implementation, and optimisation.

How enterprises should prepare for 2025–2026

Here are the next steps organisations should consider:

1. Map high-value workflows for agent automation

Identify repetitive, cross-team workflows where autonomous task execution delivers value.

2. Design your agent governance framework

Define roles, access boundaries, audit controls, and operational monitoring.

3. Prepare your data infrastructure

Ensure clean, accessible, governed data that agents can safely use.

4. Integrate your productivity tools

Leverage Teams, Microsoft 365, and MCP-compatible apps to reduce friction.

5. Start with a controlled pilot

Choose one business unit or workflow to test agent deployment under monitoring.

6. Plan for organisation-wide rollout

Once guardrails are validated, scale agents into more complex processes.
